For a long time, adopting AI was easy.

You picked an API. You shipped a feature. You paid the bill.

But as AI usage grows across customer support, internal tools, analytics, and products, many teams are realising something uncomfortable:

AI isn’t just a tool anymore.

It’s becoming infrastructure.

And infrastructure decisions age quickly if they’re made casually.

This is why more founders and operators are exploring private AI setups powered by open or open-weight models. Not because they hate cloud APIs, but because they want control, predictability, and long-term flexibility.

The question is no longer “Which AI model is best?”

It’s “Which model fits how our business actually operates?”

This guide breaks down the five open-source models businesses are most commonly evaluating today, and how to choose between them without locking yourself into the wrong path.

Why Businesses Are Moving Toward Private AI

Private AI setups aren’t about chasing novelty. They’re a response to very real operational pressure.

As AI adoption deepens, teams start running into:

- Unpredictable inference costs

- Sensitive data flowing outside their infrastructure

- Limited control over model behaviour

- AI logic scattered across products, tools, and teams

A private AI approach changes the trade-offs:

- Costs become more predictable

- Data stays internal

- Models can be customised to your domain

- AI becomes a system, not a subscription

Open-source and open-weight models are what make this possible, but each one optimises for a different kind of business reality.

The Five Models Most Teams Are Actually Considering

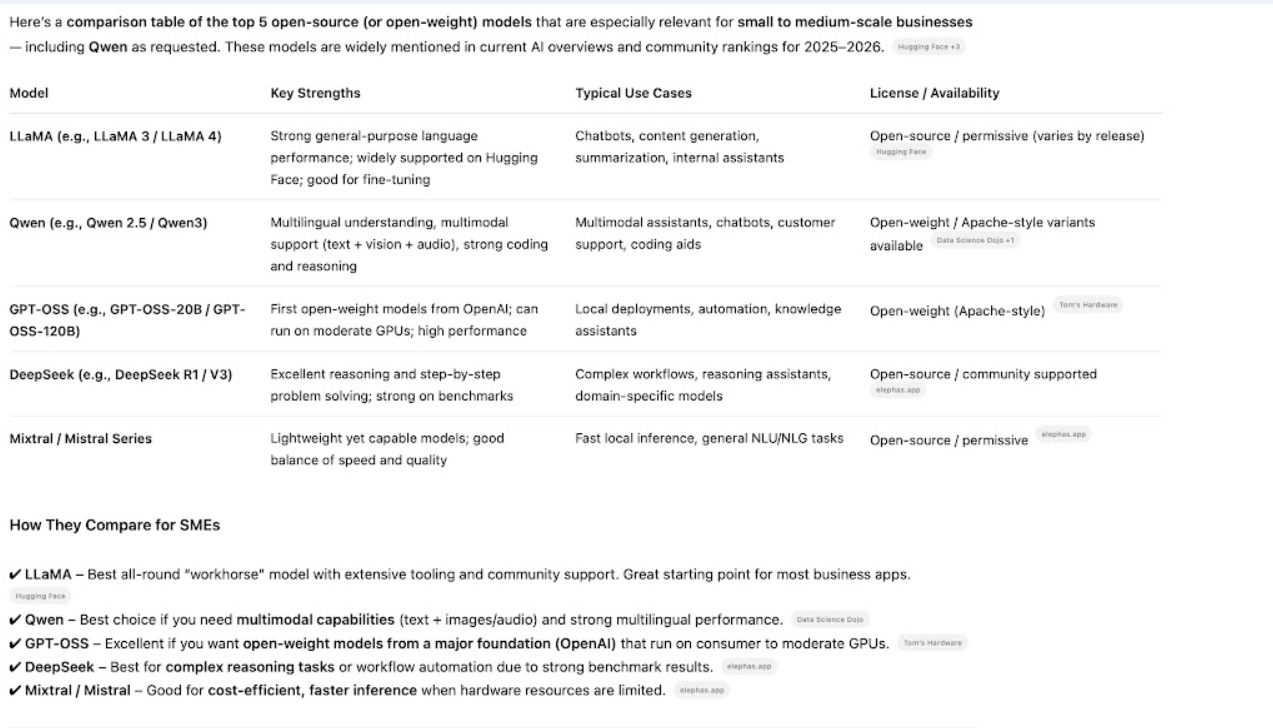

Before diving deeper, here’s the honest landscape:

Each serves a different role. Let’s break them down properly.

LLaMA: A Strong Foundation for Custom AI Systems

LLaMA is best understood as a foundation, not a finished product.

It doesn’t try to be the most polished assistant out of the box. Instead, it’s designed to be adaptable, which is exactly why it has become the backbone of many private AI stacks.

Why teams choose LLaMA

- Large and mature ecosystem

- Strong support for fine-tuning and retrieval-augmented generation (RAG)

- Works well across multiple model sizes

Where it fits best

- Internal knowledge assistants

- Workflow automation

- AI embedded deeply into SaaS products

- Long-term, evolving AI platforms

The trade-off

LLaMA usually requires additional work, such as tuning, retrieval layers, or orchestration, to shine.

Choose LLaMA if

You’re building AI as infrastructure, not just a feature.

Mistral: When Efficiency Matters More Than Model Size

Mistral has earned attention for one simple reason: it delivers strong performance without demanding massive compute.

For many teams, that trade-off matters more than chasing the largest model possible.

Why businesses adopt Mistral

- Excellent performance for mid-sized models

- Lower latency and faster inference

- Easier to run reliably in production

Common use cases

- Customer support automation

- Document summarization

- High-usage internal assistants

- AI features used continuously throughout the day

Choose Mistral if

You care about performance per GPU hour, not just benchmark headlines.
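One way to make “performance per GPU hour” concrete is to turn measured throughput into a cost per million tokens. The sketch below is only an illustration: the function name and both sets of numbers are hypothetical, not benchmarks for Mistral or any other model, so substitute throughput and GPU prices you have measured yourself.

```python
def cost_per_million_tokens(gpu_hourly_cost: float, tokens_per_second: float) -> float:
    """Cost of generating one million tokens on one GPU at a sustained throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return gpu_hourly_cost / tokens_per_hour * 1_000_000


# Hypothetical figures for illustration only (not measured benchmarks).
mid_sized = cost_per_million_tokens(gpu_hourly_cost=2.0, tokens_per_second=1000)
large = cost_per_million_tokens(gpu_hourly_cost=8.0, tokens_per_second=400)

print(f"mid-sized model: {mid_sized:.2f} per million tokens")  # 0.56
print(f"large model:     {large:.2f} per million tokens")      # 5.56
```

With these made-up inputs the smaller deployment comes out ten times cheaper per token. The real ratio depends entirely on your hardware, batch sizes, and traffic.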

Qwen: Designed for Multilingual and Multimodal Reality

Many AI systems fail quietly outside English-only, text-only environments.

Qwen is built with that reality in mind.

What sets Qwen apart

- Strong multilingual performance

- Native support for text + image workflows

- Enterprise-oriented design choices

Where it works best

- Global customer support systems

- Document and image understanding

- Visual content analysis

- Products serving non-English or Asian markets

The trade-off

Multimodal capabilities come with higher infrastructure complexity.

Choose Qwen if

Your AI needs to read, see, and understand across languages and formats.

GPT-OSS: GPT-Like Experience Without the Lock-In

GPT-OSS models exist for teams that like the style of GPT, but not the dependency.

They focus on:

- Instruction following

- Conversational clarity

- Familiar assistant-style interactions

Where they fit well

- Internal copilots

- Sales and marketing assistants

- Content drafting tools

- Teams migrating off proprietary APIs

What to expect

They may not match frontier proprietary models, but they’re more than capable for real business workflows.

Choose GPT-OSS if

Your team wants GPT-like behaviour with private control.

DeepSeek: Built for Thinking, Not Just Talking

DeepSeek stands out for one reason: reasoning depth.

It’s less focused on personality and more focused on structured thinking.

Why technical teams like it

- Strong performance in math and logic

- Excellent for code generation and review

- More “analyst” than “assistant”

Best-fit scenarios

- Engineering support

- Financial modeling

- Data analysis

- Technical decision-making

Choose DeepSeek if

Your AI needs to reason carefully, not just respond fluently.

How to Choose Without Over-Optimising the Decision

Instead of asking “Which model is best?”, ask:

What role should AI play inside our business?

- Core AI infrastructure → LLaMA or Mistral

- High-volume operational AI → Mistral

- Global or multimodal workflows → Qwen

- GPT replacement for teams → GPT-OSS

- Analytical or technical reasoning → DeepSeek

Many strong private AI systems don’t rely on one model.

They use one general model and one specialist, orchestrated together.
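As a rough sketch of what “one general model and one specialist” can look like in code, the router below sends a few task types to a specialist and everything else to a general model. Everything here is an assumption for illustration: the task names, the `route` function, and the two stand-in model functions, which simply tag the prompt instead of calling a real model server.

```python
from typing import Callable

# A "model" here is any function from prompt text to response text.
Model = Callable[[str], str]


def general_model(prompt: str) -> str:
    # Stand-in for a call to a general-purpose model (hypothetical).
    return f"[general] {prompt}"


def specialist_model(prompt: str) -> str:
    # Stand-in for a call to a reasoning-focused model (hypothetical).
    return f"[specialist] {prompt}"


# Hypothetical task types that benefit from the specialist.
SPECIALIST_TASKS = {"code_review", "data_analysis", "financial_modeling"}


def route(
    task_type: str,
    prompt: str,
    general: Model = general_model,
    specialist: Model = specialist_model,
) -> str:
    """Send specialist tasks to the specialist and everything else to the general model."""
    model = specialist if task_type in SPECIALIST_TASKS else general
    return model(prompt)


print(route("code_review", "Check this function for off-by-one errors."))
print(route("support_reply", "Customer asks about a late delivery."))
```

In a real system the routing rule is usually the part that changes most often, so it helps to keep it in one place like this.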

How Pardy Panda Studios Helps Teams Choose (and Switch) Safely

At Pardy Panda Studios, we don’t start with model recommendations.

We start with how AI fits into your business.

Most teams ask whether they should use DeepSeek, GPT-OSS, LLaMA, Mistral, or Qwen.

But the real risk usually isn’t the model. It’s the system around it.

Our work typically involves:

- Mapping where AI logic lives today

- Designing model-agnostic workflows

- Reducing infrastructure and operational complexity

- Making future model swaps boring and low-risk

Sometimes LLaMA is the right foundation.

Sometimes Mistral or Qwen fits better.

Sometimes DeepSeek or GPT-OSS solves a very specific problem.

And often, the real fix has nothing to do with the model at all.

If you’re considering a private AI setup or already running one that feels heavier than expected, it’s worth slowing down before locking in decisions.

Schedule a clarity call with Pardy Panda Studios to:

- Evaluate which model actually fits your use cases

- Identify hidden operational and cost risks

- Design a private AI system that stays flexible as models evolve

No hype. No vendor bias. Just a clear path forward.

Below is a practical comparison table of DeepSeek, GPT-OSS, LLaMA, Mistral, and Qwen, covering performance, cost, infrastructure needs, and ideal use cases, to help you evaluate them side by side.

FAQs

Is one open-source AI model clearly better than the others?

No. Each model excels in different operational contexts. “Best” depends on how it’s deployed, maintained, and integrated.

Can we switch models later if we choose the wrong one?

Yes, if your system is designed properly. Model-agnostic architecture makes switching far easier.
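A minimal sketch of what “model-agnostic” can mean in practice: application code depends on a small interface, and each model sits behind an adapter that implements it. The names `TextModel`, `EchoBackend`, and `SupportAssistant` are hypothetical, and the backend only echoes its input so the example runs without any model server.

```python
from dataclasses import dataclass
from typing import Protocol


class TextModel(Protocol):
    """The only thing application code is allowed to know about a model."""

    def generate(self, prompt: str) -> str: ...


@dataclass
class EchoBackend:
    """Stand-in adapter; a real one would call your model server here."""

    name: str

    def generate(self, prompt: str) -> str:
        return f"{self.name}: {prompt}"


class SupportAssistant:
    """Business logic that never names a specific model."""

    def __init__(self, model: TextModel) -> None:
        self._model = model

    def draft_reply(self, ticket: str) -> str:
        return self._model.generate(f"Draft a reply to this ticket: {ticket}")


# Swapping models means changing one constructor argument.
assistant_a = SupportAssistant(EchoBackend("backend-a"))
assistant_b = SupportAssistant(EchoBackend("backend-b"))

print(assistant_a.draft_reply("late order"))
print(assistant_b.draft_reply("late order"))
```

The design choice is that prompts and workflow logic live in `SupportAssistant`, while anything model-specific stays inside the adapter.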

Do we need a large ML team to run private AI?

Not necessarily. Many teams run private AI successfully with small engineering teams, as long as ops and ownership are clear.

Is private AI always cheaper than API-based AI?

Not always. Cost savings depend on usage patterns, infrastructure choices, and system design.
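To see why the answer depends on usage, a back-of-the-envelope break-even calculation helps. The sketch below assumes a simple cost model that is not from any vendor: a fixed monthly cost for private infrastructure plus a per-token running cost, compared against a flat per-token API price. All names and numbers are hypothetical.

```python
def break_even_tokens(
    fixed_monthly_cost: float,
    api_price_per_million: float,
    self_hosted_price_per_million: float,
) -> float:
    """Monthly token volume above which self-hosting costs less than the API.

    Returns infinity when self-hosting never wins on per-token price.
    """
    saving_per_million = api_price_per_million - self_hosted_price_per_million
    if saving_per_million <= 0:
        return float("inf")
    return fixed_monthly_cost / saving_per_million * 1_000_000


# Hypothetical figures for illustration only.
volume = break_even_tokens(
    fixed_monthly_cost=3000.0,          # servers, ops time, monitoring
    api_price_per_million=5.0,          # API price per million tokens
    self_hosted_price_per_million=1.0,  # marginal GPU cost per million tokens
)
print(f"Break-even at {volume:,.0f} tokens per month")  # 750,000,000
```

Below that volume the API is cheaper under these assumptions. Above it, the private setup starts to pay for itself.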
